Generic website pull request previews using S3 buckets

tl;dr: see the example repo and full code at the end of the post.

Pull request previews of websites are neat: they provide a direct way to inspect changes to a website before they are merged into the main branch. Yet setting up a CI/CD workflow that achieves this is not always trivial and depends on the specific CI/CD provider. This might be the main reason why people rely on Netlify for this task. Netlify does exactly this: it builds a website for each pull request and provides a link to the preview. It is easy to set up as it only requires linking a GitHub repository to Netlify. As long as the repository is public and one is okay with all other restrictions, this is a great solution. Yet, this won’t work for private repositories and repositories which do not live on GitHub.

There are some GitHub actions that attempt to achieve the same thing:

There also seems to be some discussion going on whether GitHub could provide this functionality built into GitHub Pages directly.

The actions above are great, but both suffer from being GitHub-specific. In addition, the second one hasn’t been updated in two years and relies on a “complicated” combination of TypeScript and JavaScript to achieve the desired result.

All in all, there is a clear need for a generic and slim solution to this problem. This post describes how to set up a generic pull request preview system for a website using S3 buckets. While the solution uses AWS S3 buckets, any other object storage system which offers a website endpoint will work. In addition, I’ve used Drone CI for the example repo here as it is a free and self-hosted CI provider which is also often used with Gitea. Note, however, that lately many people are transitioning to Woodpecker or the upcoming built-in actions of Gitea 1.19.

Implementation details

To make the implementation generic, i.e. usable within any CI/CD provider, it focuses on using shell commands and minimal Alpine images and avoids relying on version-specific Node.js/JavaScript/TypeScript solutions.

The workflow can be broken down into the following parts:

- Checkout code

- Build website

- Create S3 bucket with the static website endpoint enabled

- Deploy the public part of the website to the bucket

- Use a webhook/API call to the PR to add a comment containing the preview URL

- (optional) Delete the S3 bucket once the PR is merged/closed
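All steps in the list above depend on a bucket name that is unique per pull request and on the website endpoint derived from it. The following is a minimal shell sketch of that derivation, not code from the example repo: the function names `preview_bucket_name` and `preview_url` are hypothetical, and the character sanitizing is an added assumption (S3 bucket names only accept lowercase letters, digits, dots and hyphens).

```shell
# Hypothetical helpers; the names are illustrative and not part of the example repo.

# Build the per-PR bucket name: <prefix>-<repo>-<pr number>.
# Lowercasing and replacing unsupported characters is an added assumption;
# the 63 character length limit of S3 bucket names is NOT checked here.
preview_bucket_name() {
  prefix="$1"; repo="$2"; pr="$3"
  printf '%s-%s-%s' "$prefix" "$repo" "$pr" \
    | tr '[:upper:]' '[:lower:]' \
    | tr -c 'a-z0-9.-' '-'
}

# Build the S3 static website endpoint for a bucket in a region.
# Uses the dotted "s3-website.<region>" form from the post; some older
# regions use "s3-website-<region>" instead.
preview_url() {
  printf 'http://%s.s3-website.%s.amazonaws.com/' "$1" "$2"
}

bucket=$(preview_bucket_name preview-gitea-org "My_Site" 42)
echo "$bucket"
preview_url "$bucket" eu-central-1
echo
```

In a Drone pipeline the arguments would come from `DRONE_REPO_NAME` and `DRONE_PULL_REQUEST`; other CI providers expose equivalent variables under different names.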

Images used

The workflow relies on the following images:

- klakegg/hugo:alpine-ci (~ 230 MB)

- alpine:latest (~ 3 MB)

- plugins/s3-sync (~ 7 MB)

- byrnedo/alpine-curl (~ 3 MB)

If your website is using a different framework than Hugo, just replace it with an appropriate slim image (and adjust your build logic).

I am aware that there are also official images for awscli, yet these are huge for no added value.

Hence, using alpine and just installing awscli on the fly seems a more minimal approach.

S3 bucket creation

When creating the bucket, I had to append || /bin/true to all calls.

This essentially lets the step succeed every time, even if the command failed.

This is needed as there is sadly no simple “skip if the bucket exists” flag.

And I did not want to add more code that first checks if the bucket exists and then conditionally creates the bucket.

In the end, always succeeding the step isn’t a blocker here.

And if issues arise during bucket creation due to permissions, these are still logged.

The only downside is that a real failure is somewhat hidden: the step is marked as “succeeded” even though the command actually failed.
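For readers who would rather have the step fail on real errors, the existence check that the workflow deliberately avoids could look roughly like the sketch below. It is an alternative to the `|| /bin/true` approach, not what the example repo does; `ensure_bucket` is a hypothetical helper name, and it assumes the key pair is allowed to call `head-bucket` on the preview buckets.

```shell
# Sketch: create the preview bucket only when it does not exist yet.
# ensure_bucket is a hypothetical helper name, not part of the example repo.
ensure_bucket() {
  bucket="$1"; region="$2"

  # head-bucket exits 0 when the bucket exists and is accessible.
  if aws s3api head-bucket --bucket "$bucket" >/dev/null 2>&1; then
    echo "INFO: bucket $bucket already exists, skipping creation"
    return 0
  fi

  # No "|| /bin/true" here: a real failure (e.g. missing permissions)
  # now fails the step instead of being hidden.
  # Note: for us-east-1 the LocationConstraint option has to be omitted.
  aws s3api create-bucket --acl public-read --bucket "$bucket" \
    --region "$region" \
    --create-bucket-configuration "LocationConstraint=$region" &&
  aws s3 website "s3://$bucket/" \
    --index-document index.html --error-document error.html
}

# Usage inside a CI step (needs AWS credentials in the environment):
#   ensure_bucket "preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST}" "$AWS_REGION"
```

The trade-off is a few more lines of shell in exchange for a step status that reflects what actually happened.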

S3 bucket sync

The files are synced with public-read ACL permissions, meaning that the files can be read by anyone out there.

If you have sensitive content somewhere, you might want to think twice if you want to use this approach as is.

You can, of course, adapt the ACL and other file permissions as needed, so that only people with the appropriate permissions can view the content (e.g. IP-restricted).

Yet in 99% of all cases I’d say this is not needed and the website is public anyhow (because we’re talking about website previews here, right?).
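If the `plugins/s3-sync` image is not available in your CI system, the same upload can presumably be done with the plain AWS CLI. The sketch below is an assumption-laden equivalent rather than the workflow's actual step: `sync_preview` is a hypothetical helper name, and the optional third argument only illustrates where a different canned ACL would go.

```shell
# Sketch: upload the generated site with the plain AWS CLI instead of the plugin.
# sync_preview is a hypothetical helper name, not part of the example repo.
#   $1: local build directory (Hugo writes to public/ by default)
#   $2: bucket name
#   $3: canned ACL, defaults to public-read as in the post
sync_preview() {
  src="$1"; bucket="$2"; acl="${3:-public-read}"
  # --delete removes remote files that no longer exist in the local build.
  aws s3 sync "$src" "s3://$bucket/" --acl "$acl" --delete
}

# Usage inside a CI step (needs AWS credentials in the environment):
#   sync_preview public/ "preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST}"
```

Keep in mind that objects uploaded with a non-public ACL are not readable through the S3 website endpoint unless access is granted some other way, for example via a bucket policy.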

Add preview URL comment in pull request

This step is actually somewhat non-generic and Gitea-specific.

I am using a call to the Gitea API endpoint issues/<pr number>/comments.

Other CI providers might have easier ways to accomplish this. Yet using a plain API should (hopefully) also be quite stable :-)

For this step, there is actually a bit of complex conditional logic taking place: it is checked whether a comment already exists to avoid creating a new one on each update of the PR.

The idea is: add the comment containing the preview URL at the top/beginning of the PR and - that’s it.
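The conditional logic described above can be sketched as two small shell functions. This is a variation, not the code from the example repo: instead of testing whether the PR has no comments at all, it looks for the bucket name in the existing comments, so the preview link would still be posted if someone commented before the first build finished. Both function names are hypothetical.

```shell
# Sketch of the "comment only once" check; function names are hypothetical.

# Build the JSON payload for the Gitea comment endpoint.
#   $1: preview URL
comment_body() {
  printf '{"body": "Website preview: %s"}' "$1"
}

# Exit 0 when a comment is still needed, i.e. when the JSON returned by
# GET .../issues/<pr number>/comments does not mention the bucket name yet.
#   $1: raw JSON response, $2: bucket name
needs_comment() {
  comments="$1"; bucket="$2"
  case "$comments" in
    *"$bucket"*) return 1 ;;
    *) return 0 ;;
  esac
}

# Usage inside a CI step (API and URL are placeholders for the real values):
#   COMMENTS=$(curl -sL -H "Authorization: token $GITEA_TOKEN" "$API/comments")
#   if needs_comment "$COMMENTS" "$BUCKET"; then
#     curl -sL -X POST -H "Authorization: token $GITEA_TOKEN" \
#       -H "Content-type: application/json" "$API/comments" \
#       -d "$(comment_body "$URL")"
#   fi
```

Matching on the bucket name rather than the full URL avoids depending on how the API escapes slashes in its JSON output.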

Cleaning up

The last step is to clean up, i.e. deleting the bucket again after the preview is not needed anymore. For this, we need to know when this happens, i.e. an event trigger that only executes when a PR is closed/merged. There are such webhook notifications from both GitHub and Gitea. Yet, unfortunately, Drone CI actively ignores these webhooks at the moment. This means that right now there is no way to automatically clean up if Drone CI is used. However, if you’re using a different CI provider, you might already be able to do so right now. I will probably update this post once we’ve switched to Woodpecker CI or the internal Gitea actions.
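On a CI provider that does run on PR close events, the cleanup decision could be sketched as below. This is an untested outline under assumptions: `cleanup_preview` is a hypothetical helper name, and the PR state is assumed to be the `state` field of the Gitea pull request API, with `closed` covering merged PRs as well.

```shell
# Sketch of the cleanup decision; cleanup_preview is a hypothetical helper.
#   $1: PR state as reported by the Gitea API field "state" ("open" or "closed")
#   $2: bucket name
cleanup_preview() {
  state="$1"; bucket="$2"
  if [ "$state" = "closed" ]; then
    # "rb --force" deletes all objects first and then removes the bucket.
    aws s3 rb "s3://$bucket" --force
  else
    echo "INFO: PR not in state closed, doing nothing"
  fi
}

# Usage inside a CI step (needs curl, jq and AWS credentials):
#   PR_STATE=$(curl -sL "https://gitea.com/api/v1/repos/$OWNER/$REPO/pulls/$PR" | jq -r .state)
#   cleanup_preview "$PR_STATE" "preview-gitea-org-$REPO-$PR"
```

Removing a bucket also requires the `s3:DeleteBucket` permission on the key pair, in addition to the object-level delete permission.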

Required tokens and security

To make everything work, the workflow requires the following tokens:

- AWS key pair with permissions to create S3 buckets, upload files and delete buckets

- A Gitea token with repo scopes to add comments to the PR

I highly recommend using tokens with minimal scope here.

The tokens must be allowed in pull request builds (obviously) which means that any pull request can perform the allowed operations on both the allowed S3 buckets and the git repo.

Hence, in addition to using tokens with minimal scopes, it should be ensured that pull requests from arbitrary forks do not run by default (and only if permission is granted).

Also, you should check upfront if any pull request is possibly aiming to expose these tokens within the build logs, i.e., by using echo $AWS_ACCESS_KEY_ID or similar.

The JSON below shows the minimum required permissions for the AWS key pair. These are also limited to buckets matching a specific wildcard pattern (preview-*) to avoid triggering actions on other buckets while still allowing for some flexibility in the bucket naming.

In case you are wondering whether using an http address is a problem: no, it’s not, because you know where the content is coming from. If you do not like it and want an https address no matter what, you need to add a CDN (e.g. CloudFront) in front of the S3 bucket and change the code to post the CloudFront URL instead of the S3 website endpoint. Note that this will expand the workflow quite a bit and also come with additional costs.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "VisualEditor0",
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketWebsite",
        "s3:GetBucketAcl",
        "s3:GetBucketPolicy",
        "s3:GetBucketLocation",
        "s3:GetObjectAcl",
        "s3:GetObject",
        "s3:CreateBucket",
        "s3:PutObject",
        "s3:PutBucketWebsite",
        "s3:PutBucketAcl",
        "s3:PutObjectAcl",
        "s3:DeleteObject"
      ],
      "Resource": ["arn:aws:s3:::preview-*", "arn:aws:s3:::preview-*/*"]
    }
  ]
}

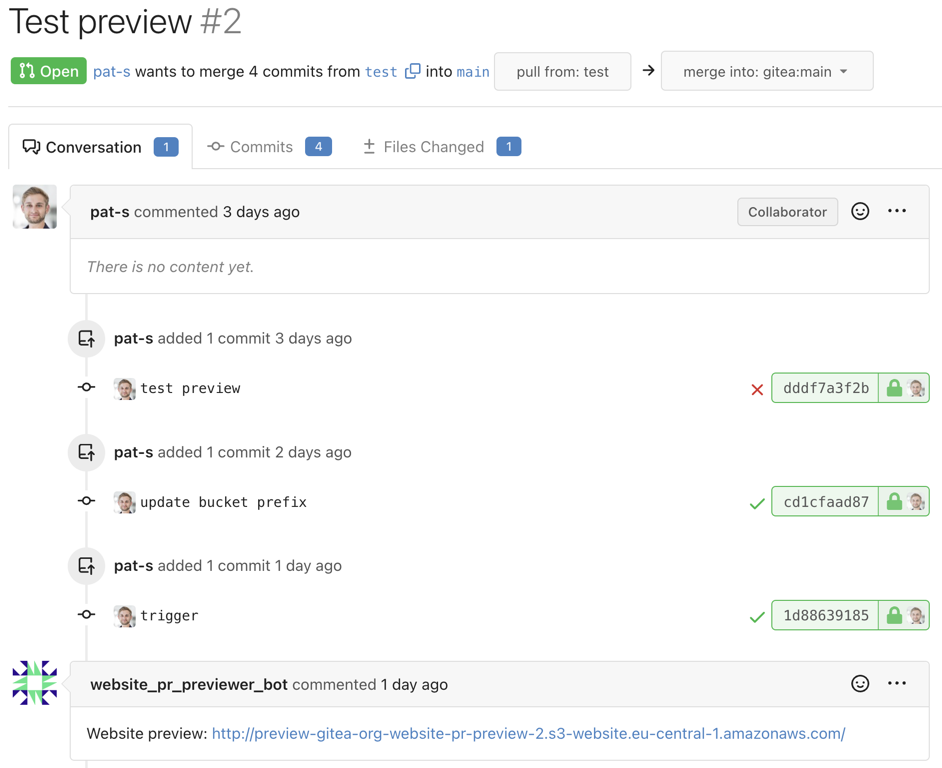

Preview

And finally, here is a preview of the final result:

Assets

Example repo: https://gitea.com/gitea/website-pr-preview

Drone CI YAML file

---
kind: pipeline
type: docker
name: Build and deploy website

platform:
  os: linux
  arch: amd64

trigger:
  event:
    - pull_request
    - push

steps:
  - name: build website
    pull: always
    image: klakegg/hugo:alpine-ci
    commands:
      - apk add git
      - git submodule update --init --recursive
      - hugo

  - name: '[PR] create s3 bucket'
    when:
      event:
        - pull_request
    pull: always
    image: alpine:latest
    environment:
      AWS_ACCESS_KEY_ID:
        from_secret: preview_aws_access_token
      AWS_SECRET_ACCESS_KEY:
        from_secret: preview_aws_secret_access_key
      AWS_REGION: eu-central-1
    commands:
      - apk add --no-cache aws-cli
      - aws s3api create-bucket --acl public-read --bucket preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST} --region $${AWS_REGION} --create-bucket-configuration LocationConstraint=$${AWS_REGION} || /bin/true
      - aws s3 website s3://preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST}/ --index-document index.html --error-document error.html || /bin/true

  - name: '[PR] deploy website to S3 bucket'
    when:
      event:
        - pull_request
    pull: always
    image: plugins/s3-sync
    environment:
      AWS_ACCESS_KEY_ID:
        from_secret: preview_aws_access_token
      AWS_SECRET_ACCESS_KEY:
        from_secret: preview_aws_secret_access_key
      AWS_REGION: eu-central-1
    settings:
      source: public/
      target: /
      region: eu-central-1
      bucket: preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST}
      acl: public-read

  - name: '[PR] Post comment to PR'
    when:
      event:
        - pull_request
    image: byrnedo/alpine-curl
    environment:
      GITEA_TOKEN:
        from_secret: access_token
      AWS_REGION: eu-central-1
    commands:
      # approach: check if comment already exists to prevent spamming in future runs
      - 'COMMENTS=$(curl -sL -X GET -H "Authorization: token $GITEA_TOKEN" https://gitea.com/api/v1/repos/${DRONE_REPO_OWNER}/${DRONE_REPO_NAME}/issues/${DRONE_PULL_REQUEST}/comments)'
      - 'if [[ $COMMENTS == "[]" ]]; then curl -sL -X POST -H "Authorization: token $GITEA_TOKEN" -H "Content-type: application/json" https://gitea.com/api/v1/repos/${DRONE_REPO_OWNER}/${DRONE_REPO_NAME}/issues/${DRONE_PULL_REQUEST}/comments -d "{\"body\": \"Website preview: http://preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST}.s3-website.$${AWS_REGION}.amazonaws.com/\"}"; else echo -e "\n INFO: Comment already exist, doing nothing"; fi'

  ### NB: not working as of 2023-02-06 due to Drone ignoring the Gitea webhook for PR close events: https://community.harness.io/t/closing-pull-request/13205
  # - name: "[PR] Delete S3 bucket after closing PR"
  #   image: byrnedo/alpine-curl
  #   environment:
  #     preview_aws_access_token:
  #       from_secret: preview_aws_access_token
  #     preview_aws_secret_access_key:
  #       from_secret: preview_aws_secret_access_key
  #     AWS_REGION: eu-central-1
  #   commands:
  #     - apk add --no-cache jq
  #     # check if PR got closed
  #     - "PR_STATE=$(curl https://gitea.com/api/v1/repos/${DRONE_REPO_OWNER}/${DRONE_REPO_NAME}/pulls/${DRONE_PULL_REQUEST} | jq -r .state)"
  #     # delete S3 if PR is closed
  #     - "if [[ $PR_STATE == 'closed' ]]; then aws s3 rb s3://preview-gitea-org-${DRONE_REPO_NAME}-${DRONE_PULL_REQUEST} --force; else echo -e '\n INFO: PR not in state closed, doing nothing'; fi"

volumes:
  - name: cache
    temp: {}